The Power of Internal Links

Each page on the web has its own history, purpose, strengths and weaknesses. The task of an SEO is to analyze these four components and, based on them, to create a strategy that either optimizes and ranks the page itself or passes its weight to another page that should rank. This seems like a simple task in theory, but with mega structures and websites of over 50,000 pages it becomes more complicated.

In this material we will develop several scenarios and approaches according to website type. We will start with a brief introduction and definitions of the basic terms, and then look at how to get started on a project with a small number of pages.

What are internal links?

Internal links are hyperlinks that connect the pages of a website to one another, using a set of anchor texts. They directly affect how much weight one page passes to another (internal PageRank). It is generally argued that using a variety of anchor texts is the better strategy.

In addition, they serve as internal navigation within the website, assisting UX (user experience), which in turn influences other behavioral factors on the website. Before embarking on any kind of internal linking, you need to be well prepared to make informed decisions.

Connectivity is the analysis of the use of internal links, separated into horizontal and vertical types, based on the frequency and type of repetition from page to page and on the relevance of the intent of the giving and the receiving page.

– NIKOLA MINKOV – FOUNDER & CEO AT SERPACT

Definition of PageRank

Internal and external PageRank are often confused. The foundation is the external PageRank created by Larry Page and Sergey Brin at Stanford University back in 1998, when they laid the foundations for Google. This PageRank is an internal Google algorithm that measures the authority of a domain or a page; its publicly visible version used a scale from 0 to 10. In 2016, Google retired that public score, which many read as the end of PageRank, but a number of later publications indicate that this is not the case.

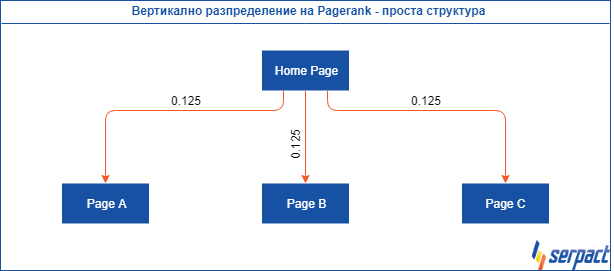

In today’s material, we will look at the so-called PageRank for internal links, which has a very strong influence on any SEO campaign, single-page websites aside. There is no official Google data on exactly how much power one page passes to another, but across most of the Serpact projects analyzed, we conclude that the average weight passed from one page to another through a hyperlink is 0.125.
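To make the idea of internal PageRank more concrete, here is a minimal sketch of the classic iterative PageRank calculation applied to a site's internal link graph only. The page names, the damping factor of 0.85 and the number of iterations are illustrative assumptions; this is not Google's actual calculation, and it is not the method behind the 0.125 average mentioned above.

```python
def internal_pagerank(links, damping=0.85, iterations=50):
    """Estimate PageRank over an internal link graph.

    links maps each page to the list of pages it links to.
    damping and iterations are assumed, textbook-style defaults.
    """
    pages = set(links) | {t for targets in links.values() for t in targets}
    n = len(pages)
    rank = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page starts each round with the same small base weight.
        new_rank = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its weight equally among its outgoing links.
                share = damping * rank[page] / len(targets)
                for target in targets:
                    new_rank[target] += share
            else:
                # A page without outgoing links spreads its weight evenly.
                share = damping * rank[page] / n
                for other in pages:
                    new_rank[other] += share
        rank = new_rank
    return rank


# Hypothetical five-page website.
site = {
    "home": ["category", "about"],
    "category": ["home", "product-a", "product-b"],
    "product-a": ["category"],
    "product-b": ["category"],
    "about": ["home"],
}

ranks = internal_pagerank(site)
for page, score in sorted(ranks.items(), key=lambda item: -item[1]):
    print(f"{page}: {score:.3f}")
```

In this toy graph the category page collects weight from the homepage and from both products, so it ends up well ahead of the individual product pages.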

Anchor texts

Calculating the anchor texts of internal links is very important, and it should be compared against the number of links pointing to the relevant page. Overdoing such links or over-rotating anchor texts can reduce your page’s visibility in Google and cost you traffic. Calculate the page-to-page ratio and the phrase-to-phrase repetition, and analyze the top 3 competitors in the niche to make sure you do not go over the so-called threshold.

To perform such a complex analysis, you need a dedicated tool such as SEMrush or Screaming Frog. You will then need a number of calculations in Excel or Google Sheets, including specialized commands (in some situations).
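The ratios described above can also be computed in a short script instead of a spreadsheet. The sketch below counts how often each anchor text points at each target page and flags anchors that exceed a share limit. The sample links and the `MAX_SHARE` value of 0.6 are hypothetical; in practice the limit would come from your own competitor analysis.

```python
from collections import Counter, defaultdict


def anchor_profile(inlinks):
    """Count anchor texts per target page from (source, target, anchor) rows."""
    by_target = defaultdict(Counter)
    for _source, target, anchor in inlinks:
        by_target[target][anchor.strip().lower()] += 1
    return by_target


def overused_anchors(profile, max_share):
    """Return (target, anchor, share) for anchors above the allowed share."""
    flagged = []
    for target, counts in profile.items():
        total = sum(counts.values())
        for anchor, count in counts.items():
            share = count / total
            # Pages with a single inlink are skipped: a share of 100% is trivial.
            if total > 1 and share > max_share:
                flagged.append((target, anchor, round(share, 2)))
    return flagged


# Hypothetical crawl export: (source page, target page, anchor text).
inlinks = [
    ("/", "/shoes/", "Shoes"),
    ("/blog/post-1/", "/shoes/", "shoes"),
    ("/blog/post-2/", "/shoes/", "running shoes"),
    ("/sale/", "/shoes/", "shoes"),
    ("/", "/about/", "About us"),
]
MAX_SHARE = 0.6  # assumed limit, not an official figure

profile = anchor_profile(inlinks)
flagged = overused_anchors(profile, MAX_SHARE)
for target, anchor, share in flagged:
    print(f"{target}: '{anchor}' is used in {share:.0%} of inlinks")
```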

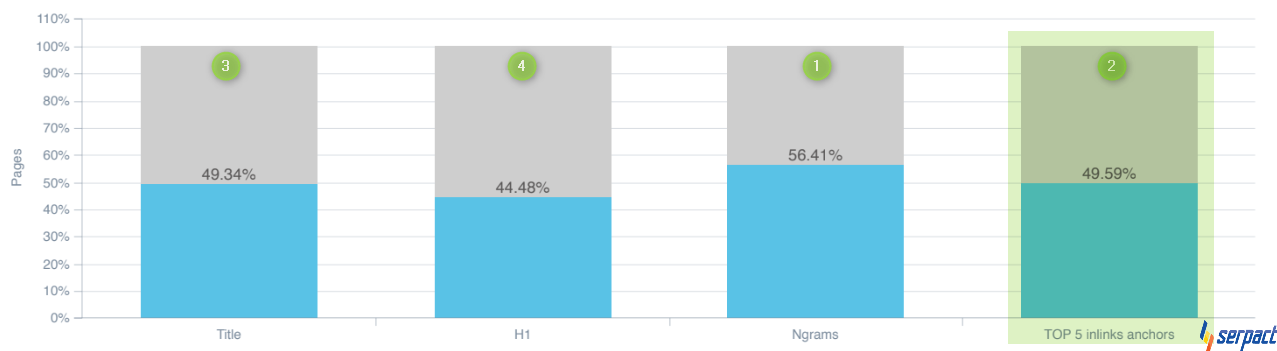

Let’s also look at our example, which compares the title, H1, n-grams and top 5 inlink anchors in the study. Note where the inlink anchors rank in terms of power: right after the n-grams, and even above the title tags. This is no coincidence, because anchors are a very powerful tool with direct influence on the SERP.

Simple page architecture

Before we start with the standard page architecture, we should add that internal links are calculated vertically and horizontally. Vertical links are those in the navigation in the header and footer of the website, while horizontal links cover all other elements. It is a good idea to separate the two so that vertical data does not leak into the horizontal analysis, or at least so that we can determine the differences between them. Note also that vertical links are, in almost all cases, the same throughout the website.

Here is an example of Vertical Structure:

Now let’s see an example of a horizontal website structure, which includes all internal links in the body, breadcrumbs, images and sidebar navigation. The major difference from the vertical structure is that its data is radically different, and much of the success of internal link optimization is hidden in it. Since horizontal analysis is more important, let’s look at what a simple structure of this kind would look like. Before we begin our review, note that every page on a website passes weight to all pages in the verticals, because in almost all cases the navigation (menu) in the header and footer is the same across the entire website.
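One rough way to separate the two link types in crawl data is to treat any target that is linked from almost every page as part of the header or footer navigation. The sketch below does this under stated assumptions: the 90% cut-off, the function name and the sample pages are all hypothetical, and a body link that happens to point at a menu page will also be counted as vertical.

```python
from collections import defaultdict


def split_vertical_horizontal(links, page_count, sitewide_ratio=0.9):
    """Split (source, target) links into vertical and horizontal groups.

    A target linked from at least sitewide_ratio of all pages is assumed
    to sit in the sitewide navigation (vertical); the rest is horizontal.
    """
    sources_per_target = defaultdict(set)
    for source, target in links:
        sources_per_target[target].add(source)
    sitewide = {
        target
        for target, sources in sources_per_target.items()
        if len(sources) / page_count >= sitewide_ratio
    }
    vertical = [(s, t) for s, t in links if t in sitewide]
    horizontal = [(s, t) for s, t in links if t not in sitewide]
    return vertical, horizontal


# Hypothetical four-page site: every page links to "/" and "/contact/" via the menu.
pages = ["/", "/contact/", "/blog/", "/blog/post/"]
links = (
    [(page, "/") for page in pages]
    + [(page, "/contact/") for page in pages]
    + [("/blog/", "/blog/post/"), ("/", "/blog/")]
)

vertical, horizontal = split_vertical_horizontal(links, len(pages))
print(f"vertical links: {len(vertical)}, horizontal links: {len(horizontal)}")
```

If your crawler already reports where on the page each link sits, that field is a more reliable basis for the split than this frequency heuristic.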

Complex Page Architecture

The question raises three main tasks:

- Navigation of mega structures (websites)

- Distribution of their internal connections

- Their vertical distribution

Certainly, when we read this question, we were extremely excited to create this publication. To be as relevant as possible to the issue, however, we had to look at our own successful projects; otherwise everything would be theoretical, and Serpact is an SEO agency that puts knowledge into practice.

Horizontal vs. vertical structure on large websites

As we have learned so far, horizontal structure is more important than vertical structure, but let’s analyze whether this holds for mega projects with over 50,000 pages. The answer may surprise you: for large online stores, the vertical structure is the important one, and we will prove it.

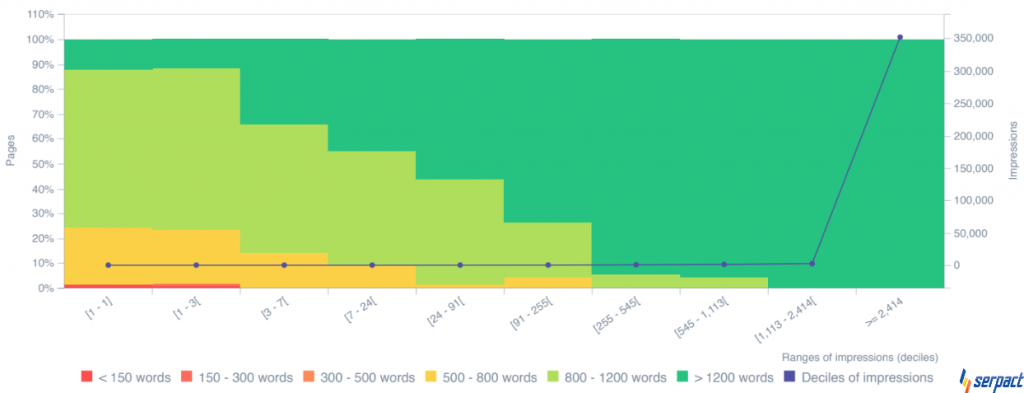

In the image, we group pages by content volume and show how many impressions each group receives. As you can see in this project, pages with 800 to 1,200 words and pages with more than 1,200 words capture the most impressions. What does this mean? It means that we do not have enough content on all the pages we want to rank, so below we set a task around the products in the category listing, which count as content.
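A grouping like the one in the image can be reproduced from a crawl export joined with impression data. The sketch below is only an illustration: the two upper buckets follow the ranges named above, while the lower buckets, the sample pages and all the numbers are hypothetical.

```python
from collections import defaultdict


def word_count_bucket(words):
    """Assign a page to a content-volume bucket by its word count."""
    if words < 400:
        return "under 400"      # assumed lower bucket
    if words < 800:
        return "400-799"        # assumed lower bucket
    if words <= 1200:
        return "800-1200"
    return "over 1200"


def impressions_by_bucket(pages):
    """Sum impressions per bucket from (url, word_count, impressions) rows."""
    totals = defaultdict(int)
    for _url, words, impressions in pages:
        totals[word_count_bucket(words)] += impressions
    return dict(totals)


# Hypothetical rows: (URL, word count, impressions).
pages = [
    ("/a", 150, 120),
    ("/b", 950, 4000),
    ("/c", 1500, 5200),
    ("/d", 600, 800),
    ("/e", 1000, 3100),
]

totals = impressions_by_bucket(pages)
for bucket, impressions in sorted(totals.items(), key=lambda item: -item[1]):
    print(f"{bucket}: {impressions} impressions")
```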

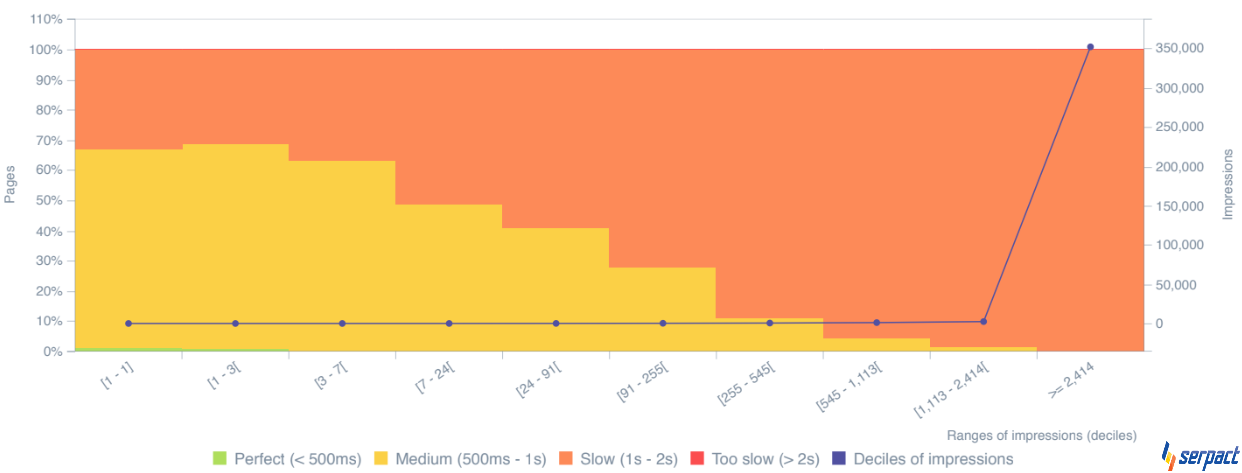

Of course, loading more products is not always the optimal solution for a single page, because it would reduce its loading speed, but let’s also turn to this method of calculation to be sure.

Let’s list the types of pages in a medium-sized online store:

- Homepage

- Product listing

- Product listing filtration results

- Product page

- Static pages

- Shopping cart

- Check Out

- 404 pages

- Profile

- Images

- Dynamic content, such as a blog

As you can see, an online store has several basic types of pages, and each one generates internal links horizontally or vertically. It is important here to distinguish which pages are indexed by Google and play a role in the internal PageRank calculation. We should deliberately arrange for Google not to index pages such as the profile, basket, cart, etc.

Page Types Ratio

We cannot do this analysis unless we determine the ratio between product category pages, product pages, and static content pages such as about us, delivery, payment, etc. We also need to consider separately any dynamic section of the website, such as a blog. For small websites, these pages play a significant role and make the horizontal stronger than the vertical, but, as mentioned, in large stores this is not the case because of their proportions.

First, we need to consider whether filter results are useful to us in Google’s results. In most big-store strategies, some of the filter pages are left in the SERP, but for smaller stores this would be detrimental because of so-called orphan pages, soft 404s, or pages reported in the new GSC (Google Search Console) as sent to Google but not currently indexed.

Big stores enjoy certain luxuries, and it is no problem for Google to crawl a few million more results for them, but they also do their part. Even one of the world’s leading online retailers, Amazon, limits Google’s bot via its robots.txt. By restricting the bot, they reduce the number of internal links and simplify their calculation, if we can say at all that such a shop has a simple calculation structure.

Another possible method is to stop the bot from counting the weight of these pages by putting a noindex tag in the head, but this completely stops the indexing of these pages, which is not our ultimate goal. Another interesting approach is to put a nofollow tag in the head, which can do a similar job to the robots.txt configuration, but it is best to test everything and measure it constantly in GSC.

There are theories and practices for online stores without a large number of pages that build so-called SEO-friendly filter URLs. These increase the number of SERP results and, on that basis, match the intent behind each query more closely. Put simply, the relevance of these sites to Google queries improves because the website serves more result pages and because the filter qualifiers (color, size, etc.) make each page more specific.
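Before relying on a robots.txt restriction, it helps to check which URLs a given set of rules would actually block. The sketch below uses Python's standard `urllib.robotparser` for that. The rules, the `shop.example` domain and the paths are hypothetical and are not Amazon's real configuration; the rules are written as plain path prefixes, since that is the form this parser matches against.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt that keeps bots out of filter, cart and profile URLs.
robots_txt = """\
User-agent: *
Disallow: /filter/
Disallow: /cart/
Disallow: /profile/
"""

parser = RobotFileParser()
parser.parse(robots_txt.splitlines())

# Hypothetical URLs to test against the rules.
urls = [
    "https://shop.example/shoes/",
    "https://shop.example/filter/color-red/",
    "https://shop.example/cart/",
    "https://shop.example/shoes/running-shoe-x/",
]

for url in urls:
    allowed = parser.can_fetch("Googlebot", url)
    print(f"{'allowed' if allowed else 'blocked'}: {url}")
```

Running a full list of crawled URLs through a check like this gives a quick count of how many pages, and therefore how many internal links, the restriction takes out of the calculation.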

Ratio and distribution for products and categories

This is probably the most valuable analysis we have to do after restricting filtering on the website through robots.txt. We take the entire SEO project back to the start (at Serpact we call this a restart) so that we can use Google data. Let’s ask ourselves the following questions:

- Why do we have 500 categories on the website?

- How many of them have Search Volume?

- How many of them have a good rank for us?

- Which ones have a good (minimum) number of results in their category?

We start with KWR (Keyword Research)

If we do not know the number of searches for each semantic core, how did we create the Sitemap (categories) of the website? In most cases, online stores do not consult an SEO agency or specialist when building the category structure of the website, which proves to be a serious mistake. Assuming such a structure is already in place, the new KWR lets us compare the current structure with the optimal one recommended by the SEO agency.

Knowing the total number of searches for each semantic core, we can check whether we have a matching category on the site and compare the two. If we do not have one, we will add it; if we have one without Search Volume, we will explain below what to do with it. However, the task is not so elementary, because when we look at the current situation we find the following scenarios and cases:

We have a category created, but it follows the wrong hierarchical structure of the web site and is not relevant to the semantic core

This is a case we can deal with relatively easily: we move the category to the appropriate place in the hierarchy. However, it is important to know whether the URLs on the website follow the hierarchical structure of the categories, because in that situation we will have to check all the child categories of the category, prepare a TOR (terms of reference, i.e. a technical assignment), and implement a 301 redirect for each change.
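When the URLs follow the category hierarchy, moving one category means redirecting it together with every child URL beneath it. The sketch below builds such a redirect list for a TOR. The function name, the paths and the output format are hypothetical; the actual 301 rules would be implemented in whatever form your platform or web server expects.

```python
def build_redirect_map(urls, old_prefix, new_prefix):
    """Map every URL under old_prefix to the same path under new_prefix."""
    redirects = {}
    for url in urls:
        if url.startswith(old_prefix):
            redirects[url] = new_prefix + url[len(old_prefix):]
    return redirects


# Hypothetical category URLs; "sneakers" is moved from /clothing/ to /shoes/.
urls = [
    "/clothing/sneakers/",
    "/clothing/sneakers/running/",
    "/clothing/sneakers/kids/",
    "/clothing/jackets/",
]

redirects = build_redirect_map(urls, "/clothing/sneakers/", "/shoes/sneakers/")
for old, new in redirects.items():
    print(f"301 {old} -> {new}")
```

The unrelated `/clothing/jackets/` category is left alone, which is the point of matching on the full prefix of the moved category rather than on its parent.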

We have a wrongly formed top-level structure in the category hierarchy of the website

This is a very serious case, which is solved by completely changing the category structure of the website, but only if these categories, sub-categories, etc. do not already rank well enough. It is necessary to create a TOR that compares this data, as well as an exact Sitemap and instructions for the 301 redirects. Restructuring pages that already rank well just to obtain a correct hierarchical structure does not help SEO in any way; on the contrary, it harms it.

We have an intermediate category that is not present in the hierarchy, so the relationship between the previous and the next category is lost.

This case may have the most favorable solution, but it depends on the URL hierarchy of the store. Assuming a flat hierarchy is not used, we will have to change and 301-redirect only the categories under the new (intermediate) category. Of course, this approach applies to an incorrect hierarchical distribution, not to the use of a gender filter. In many niches, it is recommended that the gender breakdown sit in the category hierarchy rather than in the filter, because of its large Search Volume.

Using the KWR data and comparing it with our category hierarchy, we can draw the appropriate conclusions and create a TOR for correcting it under one of the three scenarios mentioned. This is the moment to defend our thesis with data.

Ranking

It is clear to everyone that better ranking means higher organic traffic and more conversion opportunities. Knowing which semantic cores have a high search volume and which are less sought after than expected, we can divide our categories into two main groups, with subgroups by qualifiers. Here is how.

We split all categories of the website into those with more than 50 searches per month (the threshold depends on the niche and the average Search Volume for the site) and those with fewer. Then we add a second condition that separates categories with fewer than 20 products from those with more. In the example, 50% of the categories on the website remain after meeting both conditions. The website has over 500 categories and a complex structure, with over 50,000 product pages.
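The two-condition split can be sketched in a few lines. The thresholds of 50 searches and 20 products come from the text above, while the `Category` structure, the sample categories and the treatment of values exactly at the threshold are assumptions made for the illustration.

```python
from dataclasses import dataclass


@dataclass
class Category:
    url: str
    monthly_searches: int
    product_count: int


def segment(categories, min_searches=50, min_products=20):
    """Split categories into those meeting both conditions and the rest."""
    keep, review = [], []
    for category in categories:
        meets_volume = category.monthly_searches >= min_searches
        meets_products = category.product_count >= min_products
        (keep if meets_volume and meets_products else review).append(category)
    return keep, review


# Hypothetical categories with search volume and product counts.
categories = [
    Category("/running-shoes/", 1200, 85),
    Category("/trail-shoes/", 40, 60),        # too little search volume
    Category("/barefoot-sandals/", 300, 6),   # too few products
    Category("/hiking-boots/", 500, 45),
]

keep, review = segment(categories)
print("keep indexed:", [category.url for category in keep])
print("review:", [category.url for category in review])
```

As in the example project, half of these sample categories pass both conditions; the rest are candidates for the restrictions discussed in the following sections.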

Two essential questions arise:

Why are we filtering categories with a certain minimum of searches per month?

Let’s say, as in the example, that 25% of all our categories have only minimal Search Volume per month. Let’s ask ourselves: why do we need these categories and pages to be indexed? The answer is very clear: we do not.

Many online stores make most of their sales from 10% of their range, and in 99% of cases this range has a high Search Volume. If we do not optimize the crawl budget of the website and do not give weight to the pages that are visible and convert, what are we doing SEO for? For the image of ranking for phrases with low or no Search Volume?

As you reflect on the above, you may be wondering what the connection is between these limits and internal links. Remember that noindex pages are not involved in the distribution of internal links. By restricting the bot from crawling them, we reduce the PageRank and power they receive through the vertical, which is the stronger structure in online stores with a large number of pages. However, we keep them in the navigation of the website to preserve good UX for users.

Why filter categories with a certain minimum of products?

We wrote above that it is extremely important for Google to find a variety of content on each page, and that is the case when we have a variety of products in the categories. Perhaps few know that the products in a category are content and that Google treats them as such. This is where the power of big stores like Amazon, eBay and Alibaba comes from: they dominate not through content generated on a blog, but through the number of results (products) in a category, thus serving Google’s goal of providing the most relevant result for each query. Numbers definitely play a significant role. We also protect ourselves from orphan pages and soft 404s, which are another serious issue in SEO.

Conclusion and result

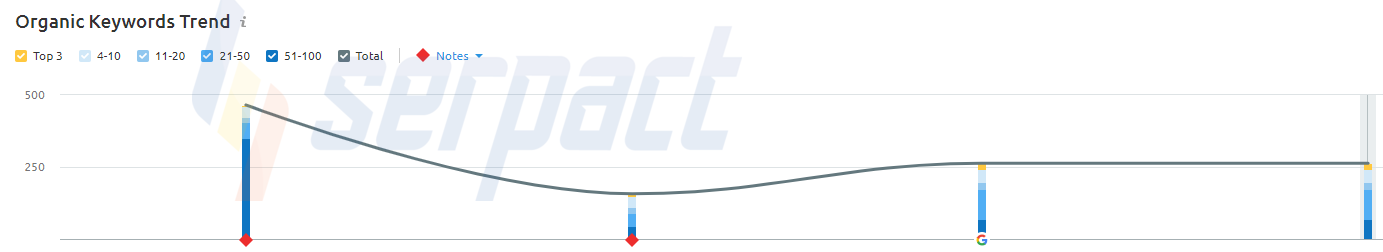

This is data for a project in which we applied the criteria described above and created the redirects and a new TOR for the hierarchy. Less than 3 months after implementation, the number of ranking keywords had decreased from nearly 500 to 250 phrases (a decrease of 50%). Over 80% of the phrases now fall into the exact category and the relevance is tight. We were able to improve the current rankings and to increase traffic and conversions for higher-value phrases, ultimately adding weight to landing pages through restriction and proper distribution of internal vertical links.

- We have risen sharply into the top 3 positions

- We have raised the positions from top 4 to top 10

- By doing these two points, we have increased organic traffic because these are phrases with a large Search Volume

- As a result, we also increased store conversions by making the traffic more targeted

- We cleared over 90% of harmful phrases that carried neither rankings, traffic, nor conversions

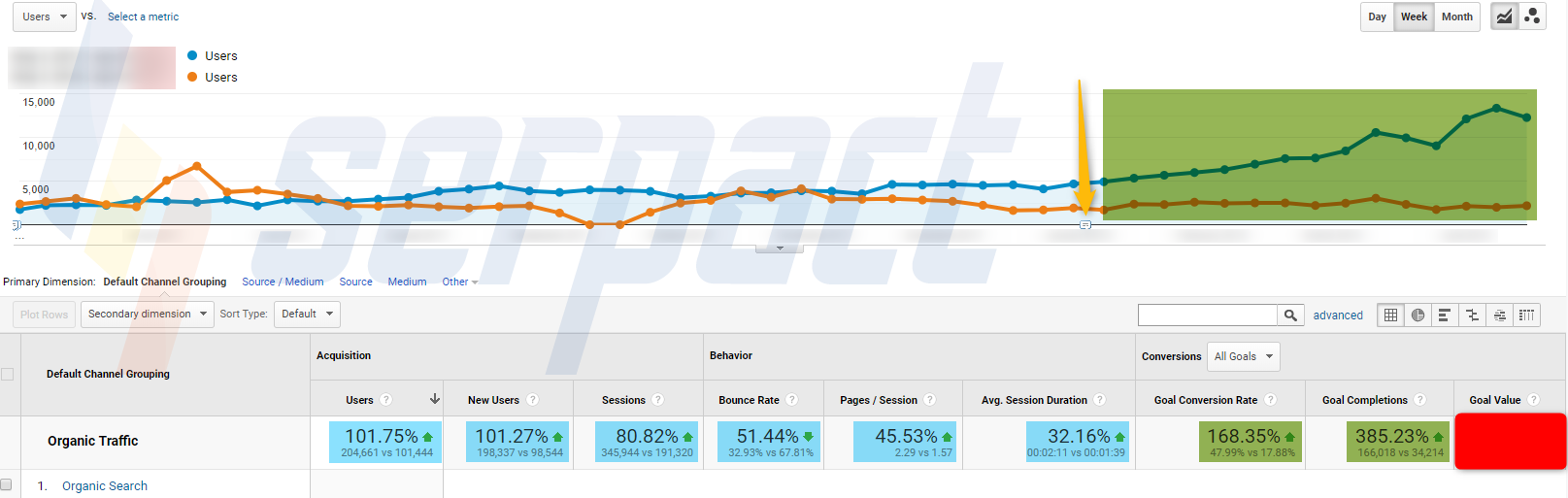

Here is the GA (Google Analytics) data, where we can see that two months after the change, organic traffic starts to grow, from an average of 5,000 organic visits per week to 12,500 organic visits per week. We reduced the number of phrases we rank for, but we improved the ranking of those with higher Search Volume.

The customer is also happy, because conversions from organic traffic alone have grown by over 385% compared to last year. These are some of the good results from this successful Serpact case study.